
The Power of Normalization vs. Denormalization

- Author: Abhishek Thakur (@abhi_____thakur)

Why Schema Design Matters

Database schema design is foundational to system performance, scalability, and feature development. A well-crafted schema:

- Accelerates product velocity by reducing technical debt.

- Adapts to growth, avoiding bottlenecks as the user base grows from 10K to 10M+.

- Prevents painful refactors by aligning structure with long-term goals.

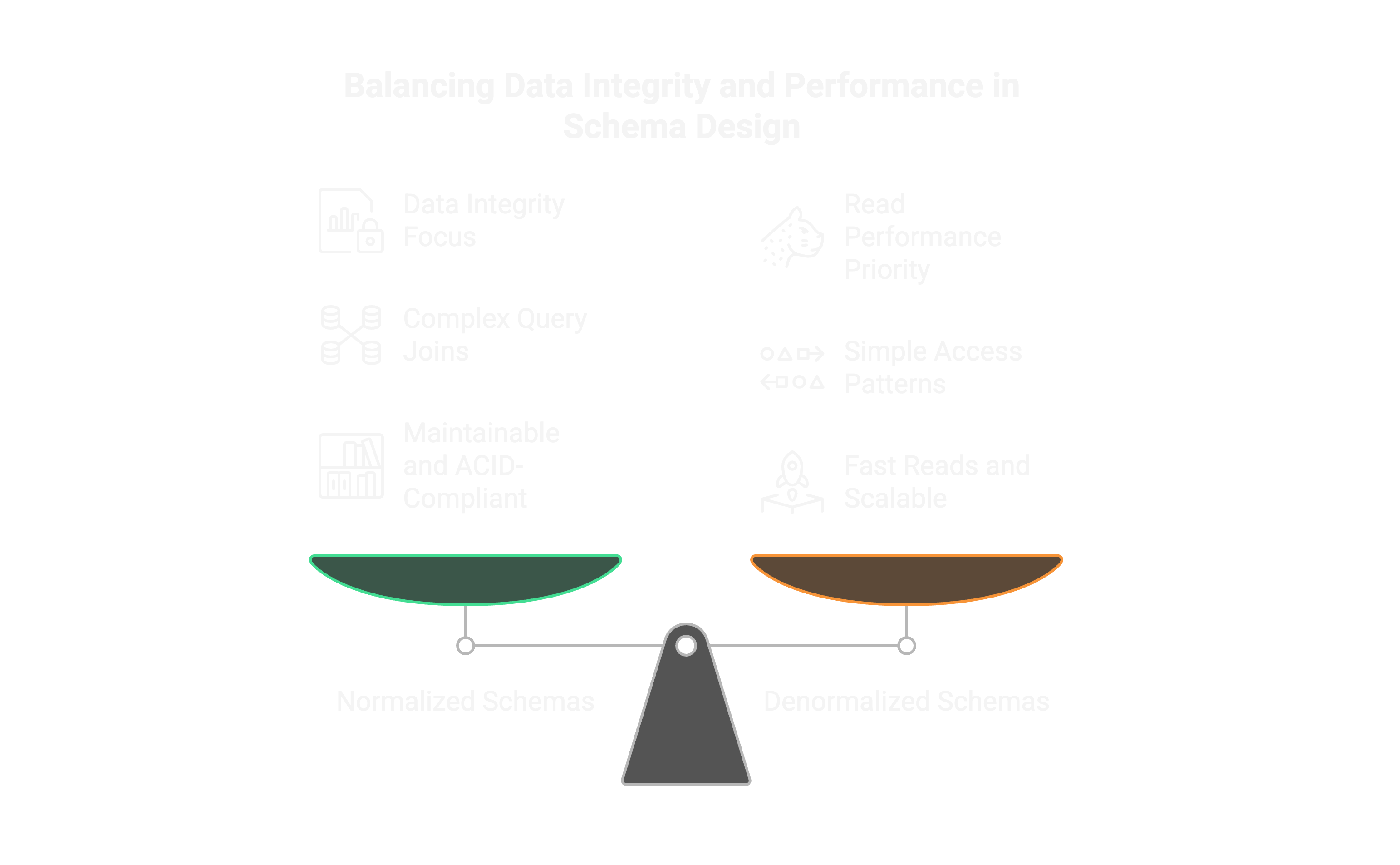

The Core Dilemma: Normalize or Denormalize?

Teams face a critical choice:

Normalized Schemas

- Prioritize data integrity and minimal redundancy.

- Ideal for transactional systems requiring consistency (e.g., banking).

- Enforce rules through normal forms (1NF, 2NF, 3NF, etc.).
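To make the normalized side concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `customers` and `orders` tables and their columns are hypothetical, chosen only for illustration. Each fact is stored once, a foreign key guards integrity, and reads pay for that with a join.

```python
import sqlite3

# Hypothetical shop schema (table and column names are illustrative).
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite does not enforce FKs by default
conn.executescript("""
CREATE TABLE customers (
    id    INTEGER PRIMARY KEY,
    email TEXT NOT NULL UNIQUE
);
CREATE TABLE orders (
    id          INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(id),
    total_cents INTEGER NOT NULL
);
""")
conn.execute("INSERT INTO customers (id, email) VALUES (1, 'ada@example.com')")
conn.executemany(
    "INSERT INTO orders (customer_id, total_cents) VALUES (?, ?)",
    [(1, 1500), (1, 2500)],
)

# The email lives in exactly one row, so changing it is a single-row update.
conn.execute("UPDATE customers SET email = 'ada@new.example' WHERE id = 1")

# Reading an order together with its customer's email requires a join.
rows = conn.execute("""
    SELECT o.id, c.email, o.total_cents
    FROM orders AS o
    JOIN customers AS c ON c.id = o.customer_id
    ORDER BY o.id
""").fetchall()

# The foreign key rejects an order that points at a missing customer.
try:
    conn.execute("INSERT INTO orders (customer_id, total_cents) VALUES (99, 100)")
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True
```

After the update, both joined rows show the new email without any order row having been touched.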

Denormalized Schemas

- Optimize read performance and simplify queries.

- Trade-off: accept redundant copies of data in exchange for faster reads at scale (e.g., analytics dashboards).

- Common in NoSQL or read-heavy systems.
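For contrast, a denormalized layout might look like the sketch below. It again uses `sqlite3`, with a hypothetical `order_report` table that copies customer attributes onto every order row. A dashboard query then needs no join, but the same email appears more than once.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical read-optimized table: customer fields are duplicated per order.
conn.execute("""
CREATE TABLE order_report (
    order_id       INTEGER PRIMARY KEY,
    customer_email TEXT NOT NULL,
    customer_tier  TEXT NOT NULL,
    total_cents    INTEGER NOT NULL
)
""")
conn.executemany(
    "INSERT INTO order_report VALUES (?, ?, ?, ?)",
    [
        (1, "ada@example.com", "gold", 1500),
        (2, "ada@example.com", "gold", 2500),
        (3, "bob@example.com", "free", 700),
    ],
)

# A dashboard aggregate reads one table, with no joins.
revenue_by_tier = conn.execute("""
    SELECT customer_tier, SUM(total_cents)
    FROM order_report
    GROUP BY customer_tier
    ORDER BY customer_tier
""").fetchall()

# The cost: count the rows that repeat an email already stored elsewhere.
redundant_rows = conn.execute(
    "SELECT COUNT(*) - COUNT(DISTINCT customer_email) FROM order_report"
).fetchone()[0]

print(revenue_by_tier)  # [('free', 700), ('gold', 4000)]
print(redundant_rows)   # 1
```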

Navigating the Trade-offs

Normalization Strengths:

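- Maintainable and mutation-friendly: each fact is stored once, so an update touches one place. Pairs naturally with the ACID transactions of relational databases.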

- Weakness: Complex joins can slow queries.

Denormalization Strengths:

- Fast reads, simple access patterns, and horizontal scalability.

- Weakness: Updates require careful synchronization, because every redundant copy must change together or reads return stale data.
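The synchronization cost can be sketched as follows. This assumes a hypothetical source-of-truth `customers` table plus an `order_report` table that carries a copy of the email. The helper names `change_email` and `stale_copies` are illustrative, not part of any library. Wrapping both writes in one transaction is one way to keep the copies consistent; other systems use triggers or background jobs instead.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (
    id    INTEGER PRIMARY KEY,
    email TEXT NOT NULL
);
CREATE TABLE order_report (
    order_id       INTEGER PRIMARY KEY,
    customer_id    INTEGER NOT NULL,
    customer_email TEXT NOT NULL  -- redundant copy of customers.email
);
INSERT INTO customers VALUES (1, 'ada@example.com');
INSERT INTO order_report VALUES (10, 1, 'ada@example.com'),
                                (11, 1, 'ada@example.com');
""")


def change_email(conn, customer_id, new_email):
    """Update the source row and every redundant copy in one transaction."""
    with conn:  # commits on success, rolls back if either statement raises
        conn.execute(
            "UPDATE customers SET email = ? WHERE id = ?",
            (new_email, customer_id),
        )
        conn.execute(
            "UPDATE order_report SET customer_email = ? WHERE customer_id = ?",
            (new_email, customer_id),
        )


def stale_copies(conn):
    """Count report rows whose copied email no longer matches the source."""
    return conn.execute("""
        SELECT COUNT(*)
        FROM order_report AS r
        JOIN customers AS c ON c.id = r.customer_id
        WHERE r.customer_email <> c.email
    """).fetchone()[0]


change_email(conn, 1, "ada@new.example")
```

If a code path updates `customers` alone and skips the copies, `stale_copies` becomes non-zero. That is the drift this weakness describes.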

Key Takeaways

- No Silver Bullet: Use normalization where integrity matters most, denormalization where read performance matters most.

- Hybrid Approaches: Many systems blend both (e.g., normalized writes + read-optimized caches).

- Evolution Required: Revisit schemas as usage patterns and scale change.
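The hybrid approach mentioned above (normalized writes plus a read-optimized cache) could be sketched like this. `OrderStore` and its methods are hypothetical names, and the in-memory dictionary stands in for whatever cache or read model a real system would use. Writes go to normalized tables and invalidate the cached value; reads are served from the cache when possible.

```python
import sqlite3


class OrderStore:
    """Hypothetical store: normalized tables for writes, a dict cache for reads."""

    def __init__(self):
        self.conn = sqlite3.connect(":memory:")
        self.conn.executescript("""
        CREATE TABLE customers (
            id    INTEGER PRIMARY KEY,
            email TEXT NOT NULL
        );
        CREATE TABLE orders (
            id          INTEGER PRIMARY KEY,
            customer_id INTEGER NOT NULL REFERENCES customers(id),
            total_cents INTEGER NOT NULL
        );
        """)
        self._totals_cache = {}  # customer_id -> lifetime total in cents

    def add_customer(self, customer_id, email):
        with self.conn:
            self.conn.execute(
                "INSERT INTO customers (id, email) VALUES (?, ?)",
                (customer_id, email),
            )

    def add_order(self, customer_id, total_cents):
        with self.conn:
            self.conn.execute(
                "INSERT INTO orders (customer_id, total_cents) VALUES (?, ?)",
                (customer_id, total_cents),
            )
        # Invalidate so the next read recomputes from the normalized source.
        self._totals_cache.pop(customer_id, None)

    def customer_total(self, customer_id):
        if customer_id not in self._totals_cache:
            row = self.conn.execute(
                "SELECT COALESCE(SUM(total_cents), 0) FROM orders "
                "WHERE customer_id = ?",
                (customer_id,),
            ).fetchone()
            self._totals_cache[customer_id] = row[0]
        return self._totals_cache[customer_id]


store = OrderStore()
store.add_customer(1, "ada@example.com")
store.add_order(1, 1500)
first_total = store.customer_total(1)   # computed from the tables, then cached
store.add_order(1, 2500)                # write invalidates the cached total
second_total = store.customer_total(1)  # recomputed after invalidation
```

Invalidate-on-write keeps the sketch simple. A system with heavier read traffic might update the cached value in place or refresh it asynchronously, accepting briefly stale reads.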

Next steps: Audit your current schema’s pain points (slow queries, rigid migrations, or redundancy) to guide your strategy.